Introduction

Emotions are central to the human experience and reflect our existence as sentient beings. Not only do emotions inform our reasoning and guide others to understand us; they now guide Emotional AI systems to decipher how we feel. The term Emotional AI is borrowed from Professor Andrew McStay and refers to technologies capable of 'interpret[ing] feelings, emotions, moods, attention and intention in private and public places' (McStay, 2018, 1). This paper assesses the existing, albeit premature, implementations of the technology and the interwoven impacts on society.

Noah Levenson's talk at the Tate Exchange in September 2019 propelled my research into the field. Titled Stealing Ur Feelings, Levenson's talk led me to discover Affective Computing, defined as 'computing that relates to, arises from, or deliberately influences emotions' (Picard, 1997). For the scope of this essay, the term Affective Computing is employed interchangeably with Emotional AI.

In order to understand how technology can interact with our emotions, I will begin by illustrating a few ways in which we share our emotions with machines. The smartphone era has accustomed us to sharing our emotions digitally – both online and through apps. Machines are an integral part of how we communicate and, perhaps now more than ever during the Covid-19 pandemic, we rely on our various devices to remain socially connected. Most of us engage daily with a vernacular of emojis as an instant reflection of sentiments, and perhaps our five most recently used emojis can be seen as an indication of our moods that day [fig.1].

Figure 1: Draganić, D. (2020) Frequently Used Emojis [Screenshot] Chronological order of my most recent emojis.

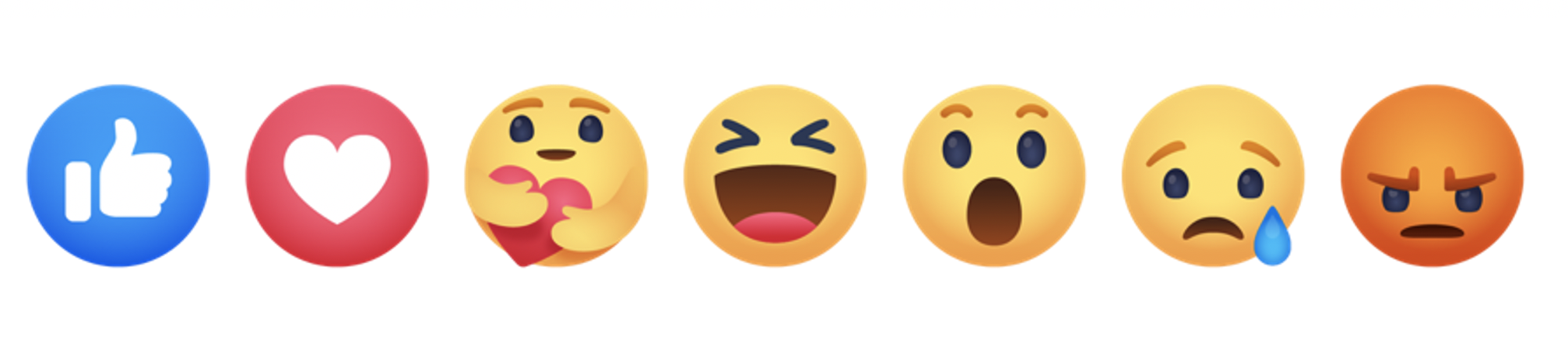

'Non-verbal, shorthand communication' (McStay, 2018, 1) extends beyond emojis and is provided by apps to give us the choice to emotionally engage with content. For example, Reactions by Facebook allows users to respond to posts with several varied emotions [fig.2]. This 2016 feature replaced the monotonous Like button to give users more scope to express their emotions online. Similarly, the dating app Tinder has the option to Super Like someone – a superlative of the right swipe – indicative of a definite interest in a potential match. These prescribed templates are a universal way for users to share how they feel online, but they lack the nuances of a furrowed brow or the joyous intimacy of a beaming grin.

Although the face is not determinant of all emotions, it is the most expressive aspect of human presence according to Emmanuel Levinas. Levinas posits "What we call the face is precisely this exceptional presentation of self by self" (Levinas, 1961, 202). Thus, as the face 'direct[s] the trajectory of a social interaction' (Meyers, 2018), it is central to Emotional AI systems that aim to accelerate valuable human interactions with machines. For clarification, an Emotional AI system cannot experience emotions; however, it can detect emotions, classify them and respond appropriately, thus offering 'the appearance of understanding' (McStay, 2018, 3). The process of naming, categorising and measuring faces holds immense value in a world where we communicate extensively through machines.

Figure 2: Draganić, D. (2020) Facebook’s Reactions [screenshot] I interpret these as: Like, love, happiness, joy, surprise, sadness, anger.

This paper inspects the pervasive impact of Emotional AI through various stages of development and implementation. I begin by describing the historical context of recognising emotions from facial expressions and its relevance to the field of Computer Vision. The first section, Diminishing Dignity, assesses how Emotional AI affects the individual, arguing that emotions are not generic and therefore irreducible by a machine. Magic Matters tackles the opacity prevalent in patent parlance and EU regulations on non-identifiable data. This paper then urges critical thought beyond ourselves to evaluate the impact of algorithmic bias in law enforcement and on intersectional groups. I end by critiquing a broadly utilitarian vision of AI and emphasising the importance of mitigating bias on both technological and social levels.

The AI industry can feel elusive, misconceived as a pernicious force reserved for computer scientists. My writing does not serve to raise a technophobic alarm; rather, it is a way to begin to understand the nascent field of Emotional AI and the interwoven impacts on society.

Timeline of Technology

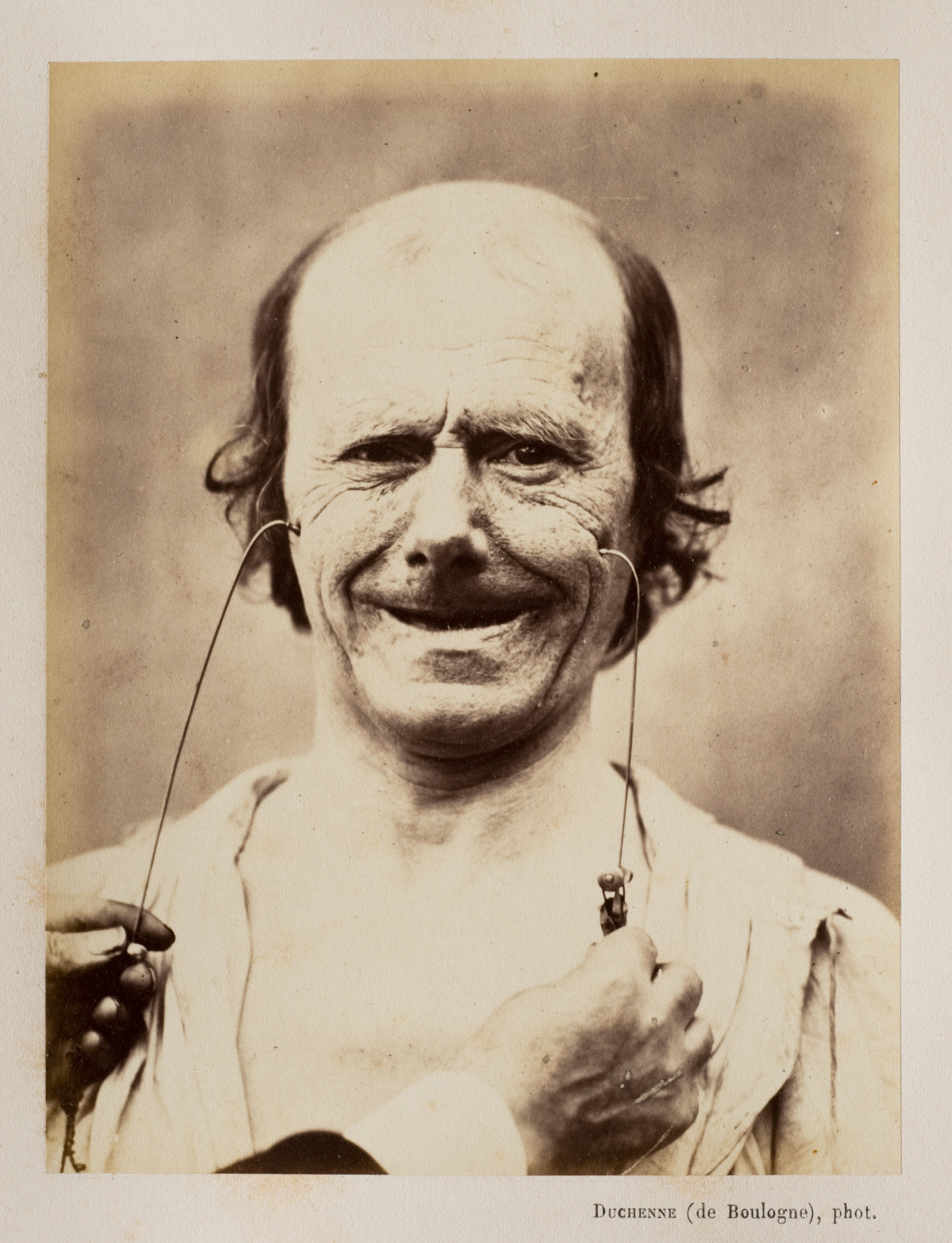

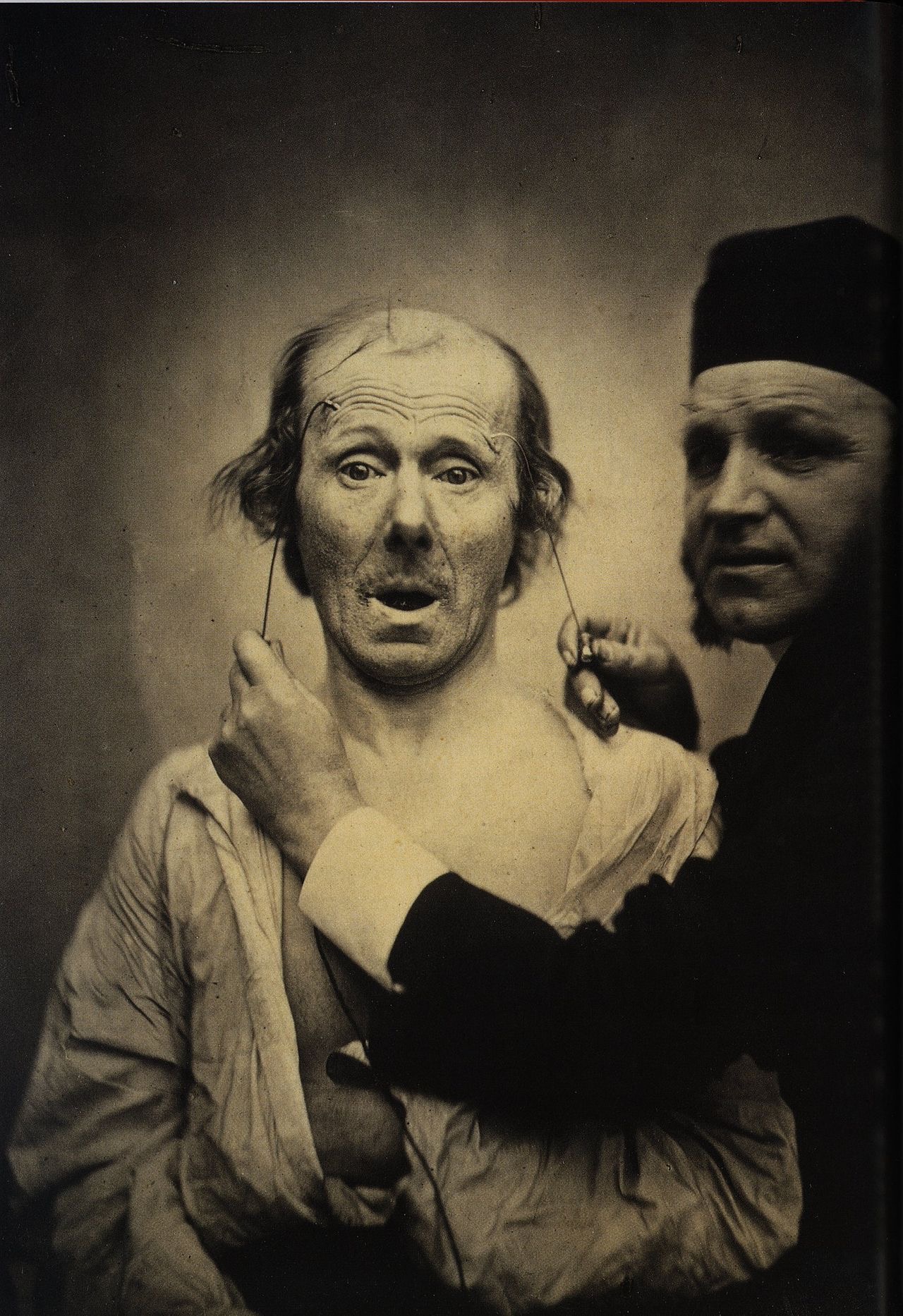

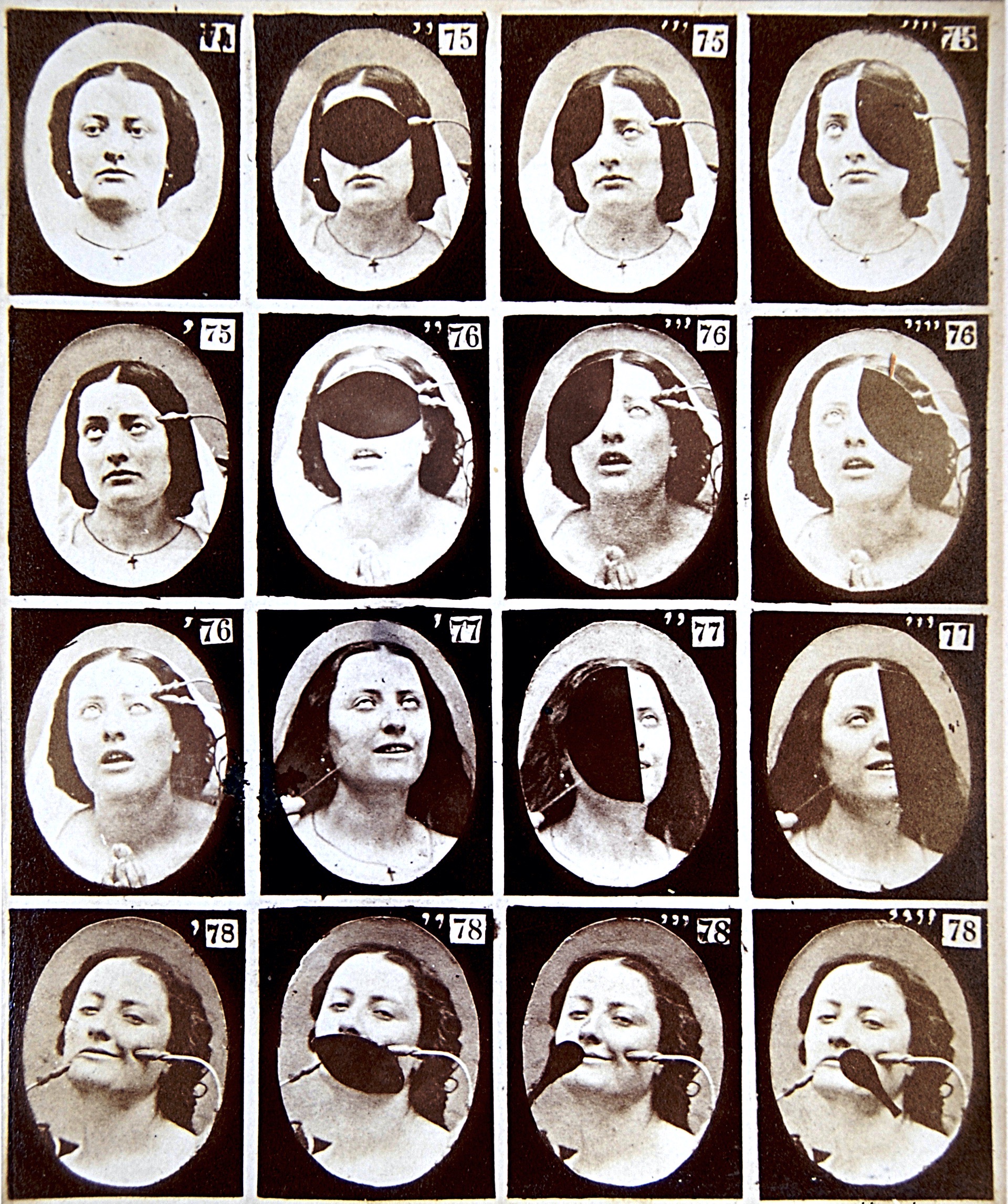

In order to dissect the contemporary implications of Emotional AI we must acknowledge the historical context. Although embryonic in terms of implementation, the origins of the technology employed in Emotional AI lie in 19th-century developments in neurology and psychology. 'Armed with electrodes' (Ekman and Boulogne, 1990, 9), French neurologist Duchenne de Boulogne stimulated the face to trigger facial expressions, which he documented extensively through photography [fig.3]. His experiments identified over 60 facial expressions and concluded that emotions are universally expressed by humans [fig.4]. Duchenne believed these expressions to be a reflection of our soul - a creation from God. His research provided the groundwork for Charles Darwin to publish The Expression of the Emotions in Man and Animals (1872). Although Darwin doubted the veracity of Duchenne's findings, he acknowledged that 'No one has more carefully studied the contraction of each separate muscle [than Duchenne]' (Darwin, 1872, 5). Darwin confirmed the universality of emotions and 'wondered whether there might…be a fewer set of core emotions that are expressed…across cultures' (Snyder et al., 2010). These core emotions include happiness, fear, sadness, surprise, anger and contempt.

Figure 3: Le Mécanisme de la Physionomie Humaine (ca 1876)

Figure 4: Le Mécanisme de la Physionomie Humaine (ca 1876)

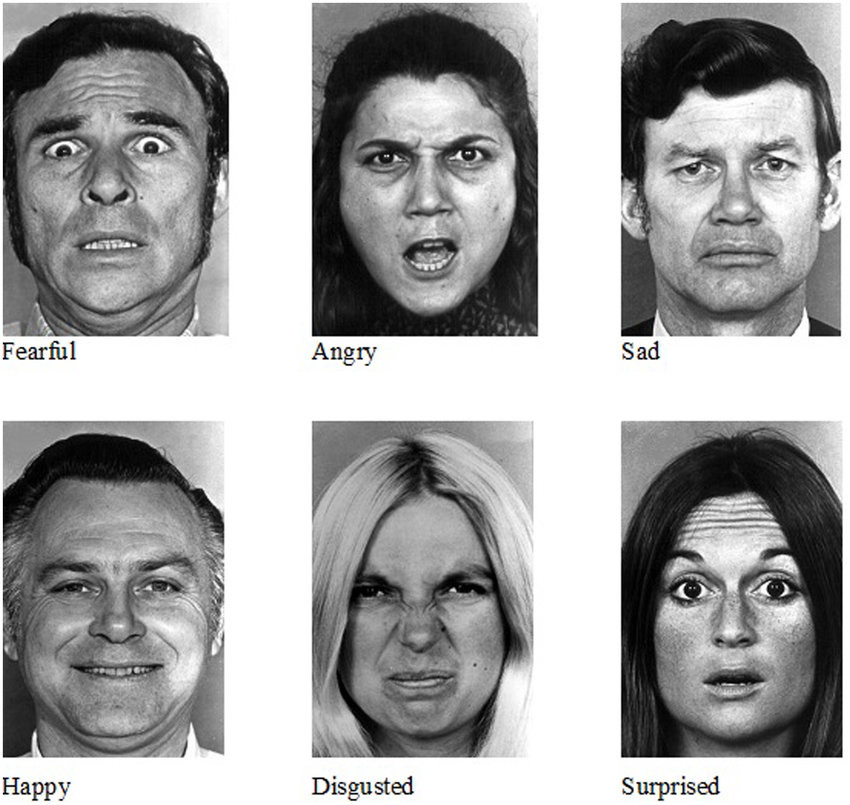

A century later, American behaviouralists Paul Ekman and Wallace Friesen developed a technique for 'quantifying and measuring all the movements the face can make' (Paul Ekman Group, 2018). Their FACS method (Facial Action Coding System) observed micro-expressions, which extend beyond the aforementioned core emotions. Micro-expressions are fleeting and involuntary - for example, a brief furrow of an eyebrow or a brisk wrinkle of the nose. Ekman borrowed extensively from Duchenne (Ekman and Boulogne, 1990, 282) to compile a database of images and labels [fig.5]. The diligence of Ekman's FACS database fascinated the Computer Vision community. The database seemed perfect to train an algorithm to recognise human expressions. Current emotion recognition technology relies on a combination of FACS and Bayesian networks. Although beyond the scope of this essay, a Bayesian network is a method of calculating the probability of a facial expression. Thus the human anatomy and emotions have become machine readable (McStay, 2018, 3).

Figure 5: Ekman-based approach to classifying and labelling facial expressions.

Diminishing Dignity

Our faces display around 7000 expressions a day (Pantic, 2018). Each one reflects our existence as sentient beings, yet self-proclaimed "camera company" Snapchat recognises the human face as an object - and not a face. Considering the face as an object to be measured and categorised by an algorithm diminishes our living presence to a numerical value. By critiquing the hegemonic Ekman-based approach to understanding and classifying emotions, I argue that genericising the human experience sees the erasure of human dignity.

The idea of human dignity is precious; understood as an inherent worth1 shared by humans. Arguably as technology progresses, so does our understanding of dignity (O'Brien, 2014, 34) but for the purpose of this essay, dignity assumes a humanistic stance. The humanistic stance affirms that an individual is complex and multidimensional; incomparable to an unexceptional object. Article 1 of the United Nations Universal Declaration of Human Rights (1948) states "All human beings are born free and equal in dignity and rights. They are endowed with reason and conscience…" (The United Nations, 1948). A protection of this human dignity is an ethical concern which lies at the root of differentiating human beings from insentient objects.

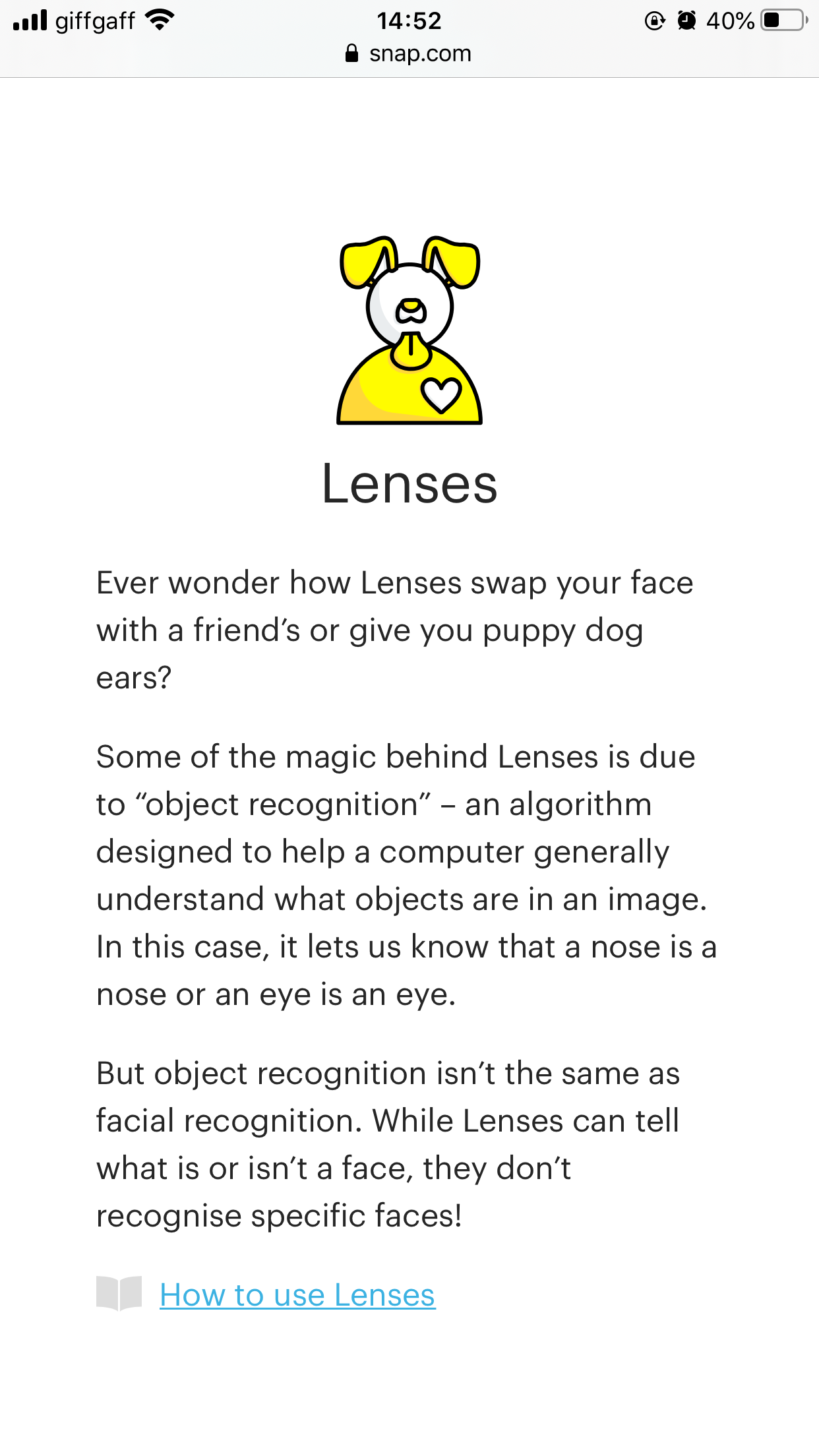

Thus, in quantifying and objectifying the faces of users, Snapchat challenges human dignity. The company's Online Privacy Centre states: 'some of the magic…is due to Object Recognition…it lets us know that a nose is a nose or an eye is an eye.' (Snap Inc, 2020). A few lines later an accurate distinction is made: 'Object Recognition isn't the same as Facial Recognition' (Snap Inc, 2020). Although correct on a technological level, the observation is concerning when dealing with matters of the human experience. How can Snapchat refer to our face - and therefore our human experience - as an object? This objectification is reminiscent of Duchenne's electrophysiological experiments where he appeared as a puppet master; manipulating the expressions of his models. The genericism of labelling facial muscles allows for the face to be 'measured by an ideal, standardised scale' (Hu and Papa, 2020, 144). Thus, the hegemonic demands of Emotional AI allow for our emotions to be reduced to a numerical value.

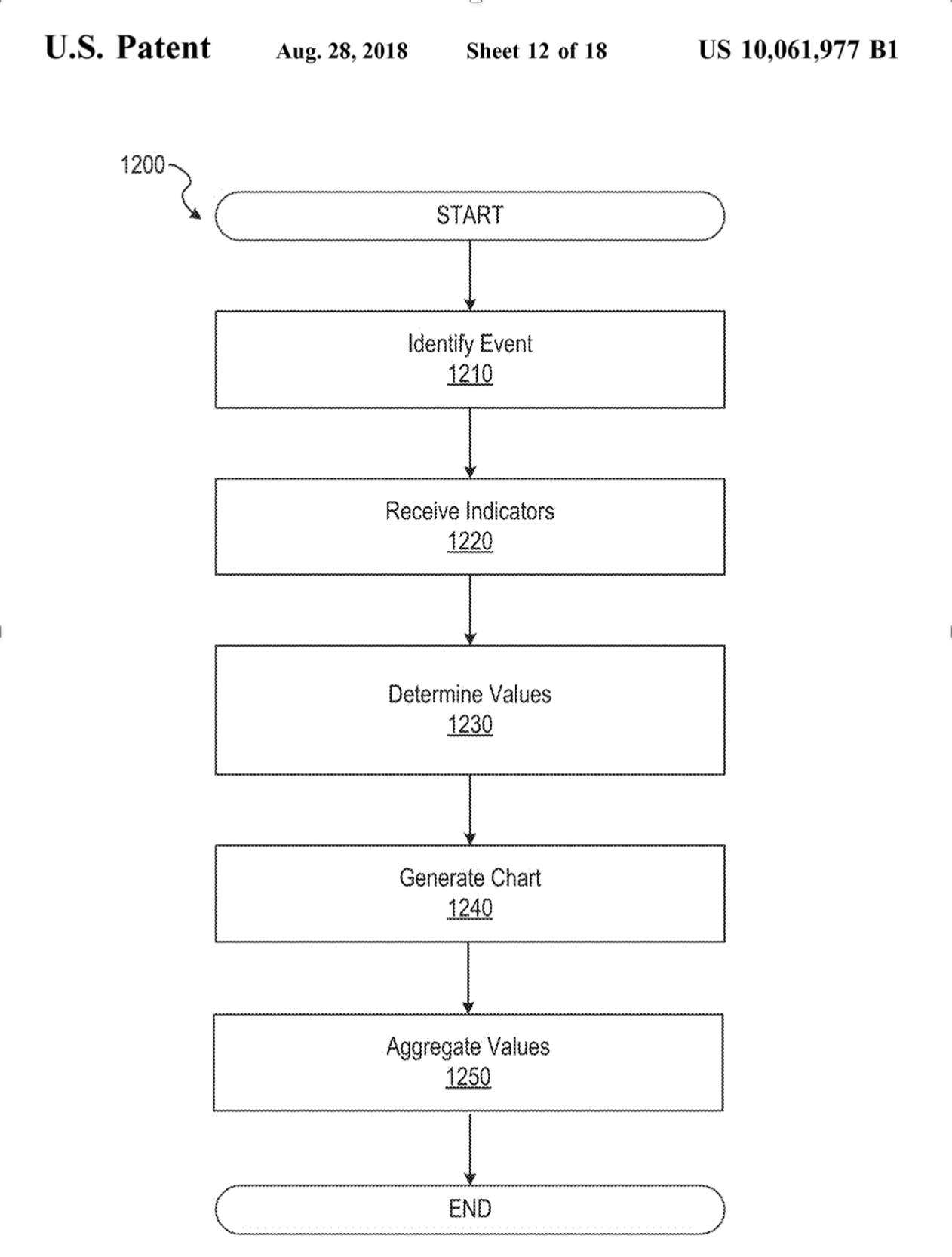

This parsing of human experience is directly evident in a Snapchat patent filed in 2015. The patent Determining a mood for a group (Chang, 2015) details the process of detecting, measuring and quantifying the emotions of users. The patent embodies Ekman's intentions of 'quantifying and measuring all the movements the face can make.' (Paul Ekman Group, 2018). The patent diminishes the human experience to an integer - an undeniable form of diminishing human dignity.

The intention of Snapchat software extends beyond 'swap[ping] your face with a friend's or giv[ing] you puppy dog ears' (Snap Inc, 2020).

The app employs a five-step system as follows [fig.6]:

- Detect the presence of 2 or more people in an image or video.

- Identify 1 or more emotions by looking at positions of key features such as the lips and eyebrows.

- Assign a numerical value to the detected emotion.

- Calculate an overall group emotion by taking the most common emotion recognised.

- Generate a chart of emotional data to be shared with third parties.

Figure 6: Diagram from patent showing the 5 steps of Snapchat's Emotional AI system. Chang, S. (2015). Determining a mood for a group. United States Patent no: US 10,061,977 B1. Available at: https://patents.google.com/patent/US10061977B1/en [online][accessed: 13 October 2020].
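The aggregation described in the five steps can be illustrated with a minimal sketch in Python. This is not the patent's implementation: the numeric codes in `EMOTION_CODES`, the function name `group_mood` and the input format are all hypothetical, and the face detection and emotion identification of the first two steps are assumed to have already produced one label per face.

```python
from collections import Counter

# Hypothetical numeric codes, for illustration only; the patent's actual
# values are not given in this essay.
EMOTION_CODES = {"happy": 1, "sad": 2, "angry": 3, "surprised": 4}
CODE_TO_EMOTION = {code: name for name, code in EMOTION_CODES.items()}


def group_mood(face_emotions):
    """Aggregate per-face emotion labels into one group emotion.

    `face_emotions` stands in for the output of steps 1 and 2
    (detecting faces and identifying an emotion for each one).
    Returns (group_emotion, chart), or (None, {}) for fewer than two faces.
    """
    # Step 1: the system only applies to two or more people.
    if len(face_emotions) < 2:
        return None, {}
    # Step 3: assign a numerical value to each detected emotion.
    codes = [EMOTION_CODES[label] for label in face_emotions]
    # Step 4: the group emotion is the most common one.
    counts = Counter(codes)
    top_code, _ = counts.most_common(1)[0]
    # Step 5: a tally of emotions that could be charted or shared.
    chart = {CODE_TO_EMOTION[code]: n for code, n in counts.items()}
    return CODE_TO_EMOTION[top_code], chart


mood, chart = group_mood(["happy", "sad", "happy"])
print(mood, chart)
```

Even in this toy form, each person's expression ends up as a single integer in a tally, which is the reduction this section is concerned with.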

These five steps summarise Snapchat's crude testing for emotions. Emotions are aggregated and fused into a numerical value; hence the patent itself reveals the roots of the erasure of human dignity. Of course, the purpose of reducing our human experience to an integer is profit. Although the focus here is on the impact of Emotional AI on the individual rather than on why Snapchat employs Emotional AI, it is important to acknowledge the term Surveillance Capitalism, coined by Shoshana Zuboff (2018) and defined as:

A new economic order that claims human experience as free raw material for hidden commercial practices of extraction, prediction, and sales. (Zuboff, 2018)

This specific Snapchat patent has been active for over two years, yet there is no clear mention of it in the company's Privacy Policy, where the following is stated:

We may also share with third parties that provide services to us or perform business purposes for us aggregated, non-personally identifiable information. (Snap Inc, 2019)

The parlance echoes that of the patent, so we can assume the patent is what the company is loosely referring to. The statement itself embodies the notion of Surveillance Capitalism. Our human experience is covertly reduced to a monetary transaction; our emotions associated with a price tag. Philosopher and ethicist Immanuel Kant (1724–1804) distinguished the notion of dignity from price. Kant argued that while the worth of an object can be measured in terms of values such as economic worth, a human – who is intrinsically worthy – cannot have a price. 'Everything has either a price or a dignity' (Kant, 1997, 42); thus, as humans with dignity – "endowed with reason and conscience" (The United Nations, 1948) – we are non-quantifiable and cannot have a price. So, as Snapchat covertly assigns users a monetary value, our dignity is diminished.

Magic Matters

Snapchat appears transparent about their use of Object Recognition; however, the use of the word 'magic' [fig.7] to summarise the process of our objectification is problematic. As established in Diminishing Dignity, the five steps behind Snapchat understanding users' emotions are not magic at all. This popular trope fuels the mystification of technology, which denies parts of society access to proper information. Magic Matters reasons that opacity surrounding Emotional AI sees the erasure of the individual. Science fiction writer Arthur C. Clarke famously wrote 'Any sufficiently advanced technology is indistinguishable from magic' (Clarke, 1962). This notion often refers to the complexity and wonder of technology. This wonder, however, becomes problematic when dealing with the personal human experience. Although Snapchat's Privacy Policy is 'written in a way that's blissfully free of the legalese that often clouds these documents' (Snap Inc, 2019), the information is so diluted under the guise of accessibility that it neglects crucial details regarding the company's use of Emotional AI. By attempting to avoid industry jargon, the information is extremely simplified and the process of quantifying our emotions is perhaps conveniently left out. Arguably there is a 'desire to avoid a PR backlash' as the quantification of emotions has a 'creepy factor' (McStay, 2020, 9). Since Object Recognition technology does not identify an individual, perhaps this serves as justification for Snapchat's covert use of Emotional AI and the subsequent lack of information surrounding it.

Figure 7: Snapchat Lenses [Screenshot] available at: https://www.snap.com/en-US/privacy/privacy-by-product Image shows the simple nature of Snapchat's information and reference to magic.

Hence, the following question arises: is 'emotional data' only problematic if it can identify an individual? Emotional data refers to information collected by an Emotional AI system. A UK survey finds that 43.1% of 18-24 year olds are 'OK' with data pertaining to their emotions being collected as long as it does not personally identify them (McStay, 2020, 9), whereas only 13.1% of the age group are 'OK' with personal identification. In comparison, only 1.7% of over 65s said they are 'OK' with being identified by Emotional AI technology. The survey concludes that 'age is the sole factor in attitudinal differences in Emotion AI' (McStay, 2020, 9). Perhaps it is fair to speculate that younger age groups are indeed wary of Emotional AI practices while simultaneously being more open to emerging ways of engaging with technology. The laws and regulations on biometric data, however, make no reference to emotions. Biometric Data is defined by the EU as:

personal data resulting from specific technical processing relating to the physical, physiological or behavioural characteristics of a natural person, which allows to confirm the unique identification of that natural person such as facial images… (European Commission, 2016: 34)

This section refers to EU regulations on data privacy as they are considered the most rigorous worldwide. Article 9 requires explicit opt-in in cases where data can be used to identify a person. Snapchat does not require opt-in consent since their services do not personally identify users. Despite their stringency, EU regulations do not have clear guidelines for unidentifiable personal data. So if Snapchat claims to not identify users, where does that leave their emotional data? It is this opacity surrounding emotional data which sees the erasure of the individual.

The disconnect between user and emotional data is reminiscent of Duchenne's experiments. The neurologist established agency over the expressions of his models as he '[created] the expression that [he wanted] as he [felt] it.' (Ekman and Boulogne, 1990). Consequently, the models were considered detached from their electrically-induced expressions. While Snapchat users have agency over how they interact with the app, they do not have agency over how their emotional data is handled - since it does not personally identify them. Emotions are intimate and sensitive and 'should be considered personal even where anonymisation techniques have been applied' (EDPS, 2015: 6). As Luciano Floridi describes: 'the "my" in "my data" is not the same "my" as in "my car", it is the same "my" as in "my hand"' (Floridi, 2016). Floridi's statement concurs with the argument of Diminishing Dignity that we are more than inanimate objects. Our emotional data should not be treated as a material object, but rather as a culmination of human experience which resides beyond our body. A protection of this data would be a protection of human dignity. And as Emotional AI begins to pervade everyday interactions, a transparent, dignity-based approach is essential to understand the impact of Emotional AI on an individual.

Belligerent Binary

We are individuals first, but we must consider our existence as a fragment of a larger social body, one where society is an 'assemblage of the organisation, arrangement, relations, and connections of actualities, objects or organisms that seemingly appear as a functioning whole' (Dixon-Román, 2016, 484). Embracing this perspective of societies within societies calls for understanding the role of computation in society through a Western lens.2 Critical theorist Luciana Parisi has characterised this as the 'age of the algorithm' (2013). Evident now more than ever, algorithms are integral to how we interact with each other and how society interacts with us. This section considers algorithms as 'humanly designed and modelled thus a prosthetic tool to human cognition' (Dixon-Román, 2016, 485): a tool which reflects the values of its creator(s). If Emotional AI is designed by humans, then the algorithms inherit some form of those humans' values.

Belligerent Binary urges thought beyond ourselves, beyond the erasure of dignity - discussed in section 1 - and instead adopts critical thought toward the implications of Emotional AI for underrepresented groups. How does Emotional AI affect the people it is not made by?

A divisive sentence occupies the Ethics page of pioneering computer vision company WeSee Ltd. It reads: 'Our mission…look[s] after the good guys and help[s] catch the bad' (WeSee, 2020). The company is dedicated to 'Safer society [and] safer business, through AI driven image, facial and emotional recognition' (WeSee, 2020). Section Operational Opacity will elaborate further on the combination of facial and emotional recognition; for now, the focus remains on a belligerent binary, one which originates in the language used to commend an Emotional AI system. WeSee boasts of the ability to 'identify suspicious behaviour by recognising seven key human emotions through facial micro-expressions, eye movement, and further facial cues' (WeSee, 2020), indicating an Ekman-based approach to understanding emotion. The company also praises its own efficacy in working with law enforcement agencies.

Drawing upon WeSee's crime-fighting rhetoric of 'looking after the good guys and helping catch the bad' (WeSee, 2020) a meta-ethical question arises: how can we formulate who is good and who is bad? The problematic motif serves as an emblem of WeSee's quest for implementing Emotional AI technology. Words like good and bad imply a gamut of emotions which either exhibit positive connotations or negative connotations. The limits of these two connotations form a problematic binary which structures the way people are labelled by an algorithm: as either good or bad. Furthermore, the masculine connotations of guy could suggest the technology is solely for recognising emotions amongst men.

A study titled Racial Influence on Automated Perceptions of Emotions (Rhue, 2018) finds Emotional AI recognises emotions differently based on race. Although Rhue's study does not involve WeSee technology, it nonetheless divulges results which are apparent across the nascent field. Findings show more negative emotions were assigned to faces of Black individuals. The data set comprised 400 images of NBA basketball players [fig.8]. The reasoning for this was to provide a set of images of relatively similar age and gender, which would ensure accuracy when testing two Emotional AI systems.

Figure 8: Example of NBA images used in data set for Rhue's study. Darren Collison (L), Gordon Hayward (R). Basketball Reference (2020) available at: https://www.basketball-reference.com/players/c/collida01.html https://www.basketball-reference.com/players/h/haywago01.html [accessed: 8 November 2020]

The study compares Face++ and Microsoft Face API. Both algorithms are globally commercial3 forms of Emotional AI, which indicates wider society is likely to come into contact with these technologies. This is crucial when considering how Emotional AI can impact underrepresented groups. Face++ is twice as likely to label Black players as angry compared to white players. And Microsoft Face API reveals 'Black players are viewed4 as more than three times as contemptuous as white players.' (Rhue, 2018, 3). The paper confirms algorithmic bias on an emotional dimension. Rhue concludes the study with a frustrating observation that 'professionals of color should exaggerate their facial expressions - smile more - to reduce the potential negative interpretations' (Rhue, 2018, 6). This inference illustrates how Emotional AI systems amplify existing power imbalances in our Western-centric society. Rhue's call for smiling more embodies the concept of "Animatedness" (Ngai, 2007) – an aging stereotype which perceives racialised individuals as either excessively or minimally emotional and expressive. My paper adopts the term racialised as 'racial logic that delineates group boundaries' (Sobrino and Goss, 2019). In a society where prejudice exists against racialised communities daily and on a systemic level, it is perhaps no surprise that racial bias pollutes AI systems too.

Caption: Lauren Rhue's study "Racial Influence on Automated Perceptions of Emotions" motivated me to understand racial disparities in commercial forms of Emotional AI. A BIGGER SMILE is a visual essay exploring the crude historical and contemporary nature of these systems.

By generating a fake smile using StyleGAN and over 400 Google images of people smiling, I was able to compare and contrast the inferences computers make about real and fake smiles. A computer-generated voice of David Attenborough provides an uncanny narration throughout.

My visual essay attempts to introduce and interrogate the nature of Emotional AI to a wider audience through the medium itself – known as "interrogability", a term introduced to me by Sheena Calvert.

Operational Opacity

Perhaps an encouraging prospect for Emotional AI and its proximity to law enforcement is evident in Microsoft's announcement on June 11, 2020 that it 'will not sell facial recognition technology to police departments in the United States until strong regulation, grounded in human rights, has been enacted.' (Microsoft, 2020). Recognising the need for revised ethical standards is fundamental when potentially implementing flawed systems. Unnervingly, in the UK, law enforcement agencies are perhaps more welcoming of Emotional AI technologies. This section identifies a lacuna in critical literature on Emotional AI and UK policing. Opacity surrounding information primarily begins with tech companies such as WeSee AI Ltd. The company claims to value transparency [fig.9], yet the site is riddled with opacity and reads:

Unlike other systems that are based on open-sourced frameworks, WeSee's unique smart approach to video classification combines machine and deep learning with the company's own proprietary rule-based algorithms, alongside specialists dedicated to data collating, sorting and tagging. (WeSee, 2020)

Figure 9: [Screenshot] available at: https://www.wesee.com WeSee AI Ltd website is embellished with phrases like Doing the right thing

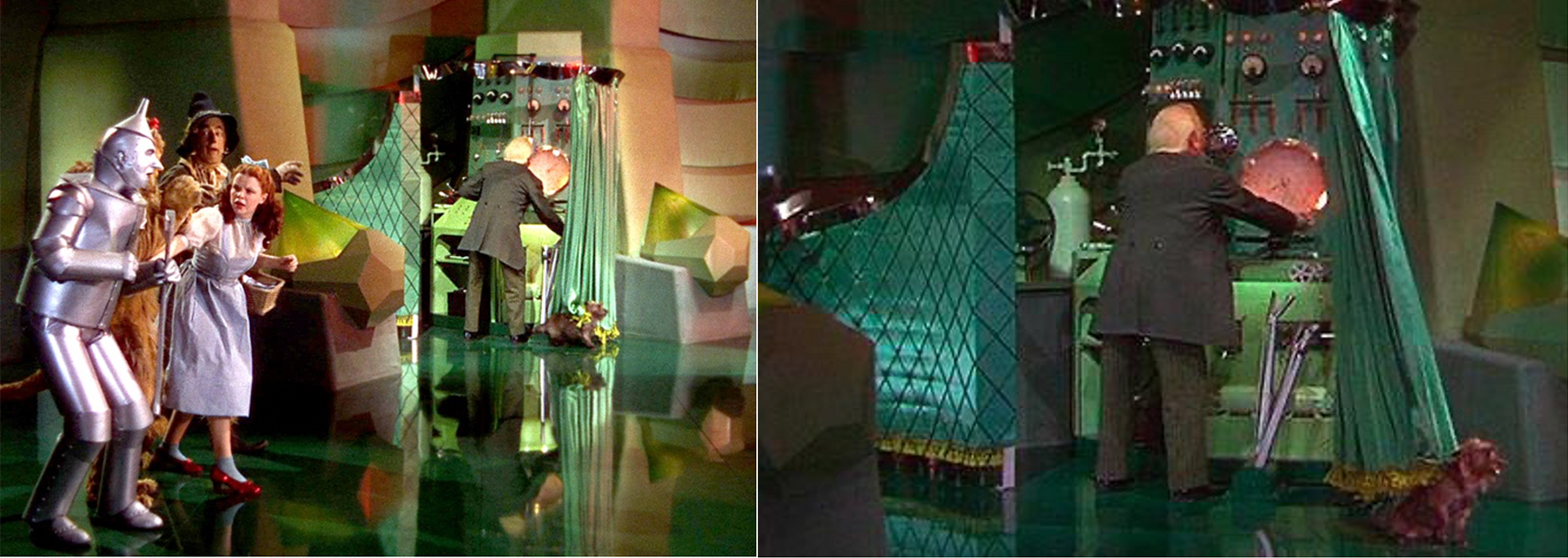

The closed-source nature of WeSee software is an immediate form of obfuscation; a denial of information. As with Snapchat, opacity surrounds the intended function of the technology. Arguably, open-sourced software is not the epitome of transparency. This is evident in GPT-2, a language AI system which generates text. The code requires sufficiently powerful hardware, and it is only navigable to one fluent in Python syntax. However, the overriding principle remains that open-sourced software is intended to be accessible. Crawford and Joler observe in the remarkable Anatomy of an AI System: 'wealth and power [are] concentrated in a very thin social layer' (2018, 9). Thus, perhaps the illusion of agency over software is strategic and serves to retain power for those who create the software. WeSee's lack of transparency perpetuates an existing power imbalance in society by refusing public access to information. Unlike the Snapchat patent discussed in Diminishing Dignity, there is no public patent to critically analyse here. So if we cannot grasp the "man behind the curtain" [fig.10], how can we trust this form of Emotional AI to 'deliver safety, security and integrity' (WeSee, 2020) through law enforcement?

Figure 10: The Wizard of Oz (1939) [screenshot] available at: http://www.tiaft.org/the-man-behind-the-curtain.html https://www.whollymindful.com/blog/embracing-the-man-behind-the-curtain The scene revealing the Wizard as a man behind the curtain is how I visualise Emotional AI and alludes to the mechanism behind how a system works. Behind every machine lies human intervention.

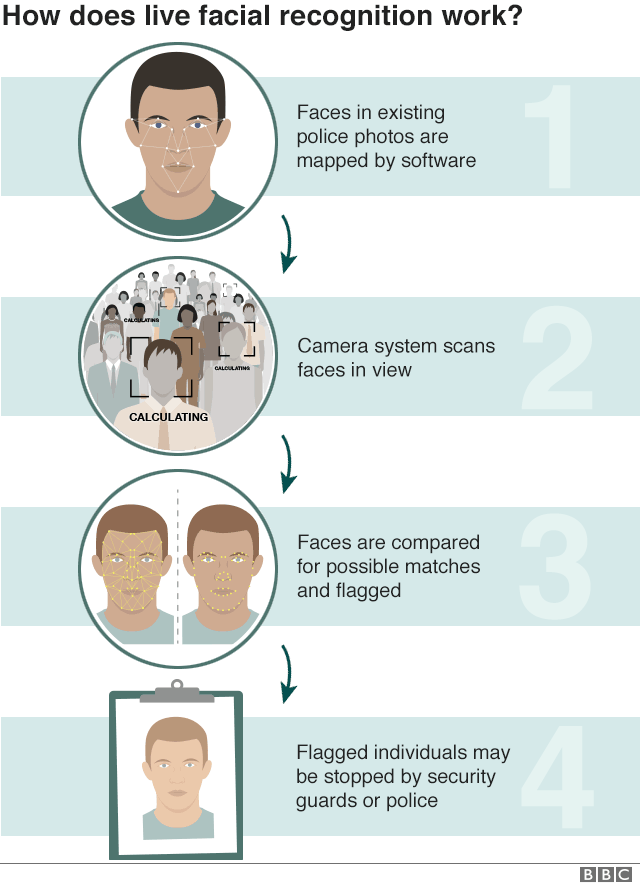

Opacity extends beyond software companies. Lincolnshire Police and Crime Commissioner, Marc Jones, has secured funding for the integration of Emotional AI technologies (Fletcher, 2020). Although 'the force has not decided what searches it will use, nor what supplier provide the system.' (Fletcher, 2020), based on recent police use of Live Facial Recognition across the UK, we can scrutinise the potential permeation of Emotional AI. Live Facial Recognition5 was deployed by London Metropolitan Police in February 2020. Described by UK Biometrics Commissioner, Paul Wiles, as 'not yet fit for use' (BigBrotherWatch, 2020), the technology aims to 'stop dangerous people who are wanted for serious criminal offences' (Metropolitan Police, 2019).

Figure 11: The process of LFR. BBC [screenshot] available at: https://www.bbc.co.uk/news/uk-england-london-46584184 [accessed: 4 November 2020]

Perhaps at first glance LFR seems like an efficient way to enforce the law. However, in 2018 only two out of 104 people stopped were confirmed to be on the Watchlist. This amounts to a 98% error rate (Metropolitan Police, 2018). A 2019 NIST study reports that commercial systems of facial recognition - such as the one employed by London Met - are racially biased (NIST, 2019). In particular, Black women are least likely to be identified correctly, with conclusive results that women are two to five times less accurately recognised (NIST, 2019, 10). Although not specifically used to identify emotions, LFR closely correlates to Emotional AI as the two systems deal with recognising human faces. '[Emotional AI is] layered on top of facial-recognition systems as a "value add."' (Crawford et al., 2019), and given the discriminatory nature of LFR, one can posit that Emotional AI inherits the same bias. The propensity to misidentify Black individuals reinforces the over-policing of communities and the institutional racism extant in our society. In the "age of the algorithm" (Parisi, 2013), how can we consider implementing Emotional AI when earlier forms of facial technology remain demonstrably flawed? The flaws have been proven to exclude Black individuals from accurate recognition, thus current police implementation of Emotional AI appears very premature. Rather than chasing bespoke advancements in Emotional AI, we will now look to where power lies in order to identify, understand and dismantle the origin of algorithmic bias.

Flawed Feedback Loop

Power affects 'how AI companies work, what products get built, who they are designed to serve, and who benefits from their development' (West et al., 2019). This section expands on how industry homogeneity encourages a feedback loop of discrimination.

Although bias pervades the algorithms used for Emotional AI, it is important to consider that an algorithm is not innately biased. An algorithm serves to perform a series of mathematical steps and engage with data. It is amid data that the erasure of intersectionality on a computational level becomes apparent. Intersectionality is an analytical framework which refers to social and political identities - the term mainly encompasses gender and race for the purpose of this paper. Ezekiel Dixon-Román postulates:

regardless of the initial code of the algorithm, as it intra-acts with myriad persons and algorithms and analyses and learns from the data, the ontology of the algorithm becomes a racialised assemblage. (Dixon-Román, 2016, 488)

If 'myriad persons' are overwhelmingly White6 and identify as male, then the algorithm embodies this homogeneity. Thus, in the context of Emotional AI, the homogeneity of 'myriad persons' - established as White men - affects the range of images in data sets. Therefore, as an algorithm 'analyses and learns from the data' (Dixon-Román, 2016, 488) it becomes racialised White. Hence, Emotional AI is less equipped to accurately recognise the faces and emotions of individuals outside the White racial frame. For example, Nikon's camera software wrongly identified East Asian users as blinking in their photographs [fig.12]. While not an explicit example of Emotional AI, Nikon's technology deals with recognising the human face and, as established, Emotional AI is considered an add-on to existing facial technology. Since Emotional AI 'digests pixels from a camera in dictated ways' (Buolamwini and Gebru, 2018), and what is dictated is overwhelmingly White and male, it propagates the gaze of those who develop the algorithm. It is the lack of diversity in data sets which begins to racialise Emotional AI as White and sees the erasure of intersectionality on a computational and industry level.

Figure 12: Both images show the shocking flaw in Nikon's facial recognition technology. Joz Wang (2010) [screenshot] available at: http://content.time.com/time/business/article/0,8599,1954643,00.html

'Bias is not just in our datasets, it's in our conferences and community' (Merity, 2017). Whiteness dominates the industry at all levels. Men account for an overwhelming 90% of AI research staff at Google (Simonite, 2018). Only 2.5% of the company's full-time workers are Black (Google, 2018). It is this disturbing asymmetry of power which fuels existing patterns of discrimination to continue at every level of industry. A deeply concerning and saddening case of industry homogeny occurred at the beginning of December 2020, when AI Ethics researcher Dr Timnit Gebru was fired from her position as co-leader of Google's Ethical AI team. Gebru's research paper exposing language bias in a Google algorithm was denied and retracted by the firm. Gebru stated, 'Your life starts getting worse when you start advocating for underrepresented people. You start making the other leaders upset' (Metz and Wakabayashi, 2020). The complete denial of bias by Google embodies the operational opacity within the industry and the incident exemplifies the company's utilitarian vision of AI. Google's lack of commitment to mitigating algorithmic bias amplifies discrimination.

Reluctant to acknowledge how their technology disenfranchises underrepresented groups, '[Google] are not only failing to prioritize hiring more people from minority communities, they are quashing their voices [too]' (Metz and Wakabayashi, 2020).

With Emotional AI increasingly saturating political institutions, education, healthcare, social media, advertising and law enforcement, it is imperative to destabilise this flawed feedback loop of exclusion. Research into the diversity crisis of the AI sector needs to go beyond diversifying data sets. Perhaps debiasing systems demands a wider social analysis, beginning with conversations about AI which place underrepresented groups at the forefront and acknowledge that intersectionality shapes people's experiences with AI.

Monochrome Mainstream

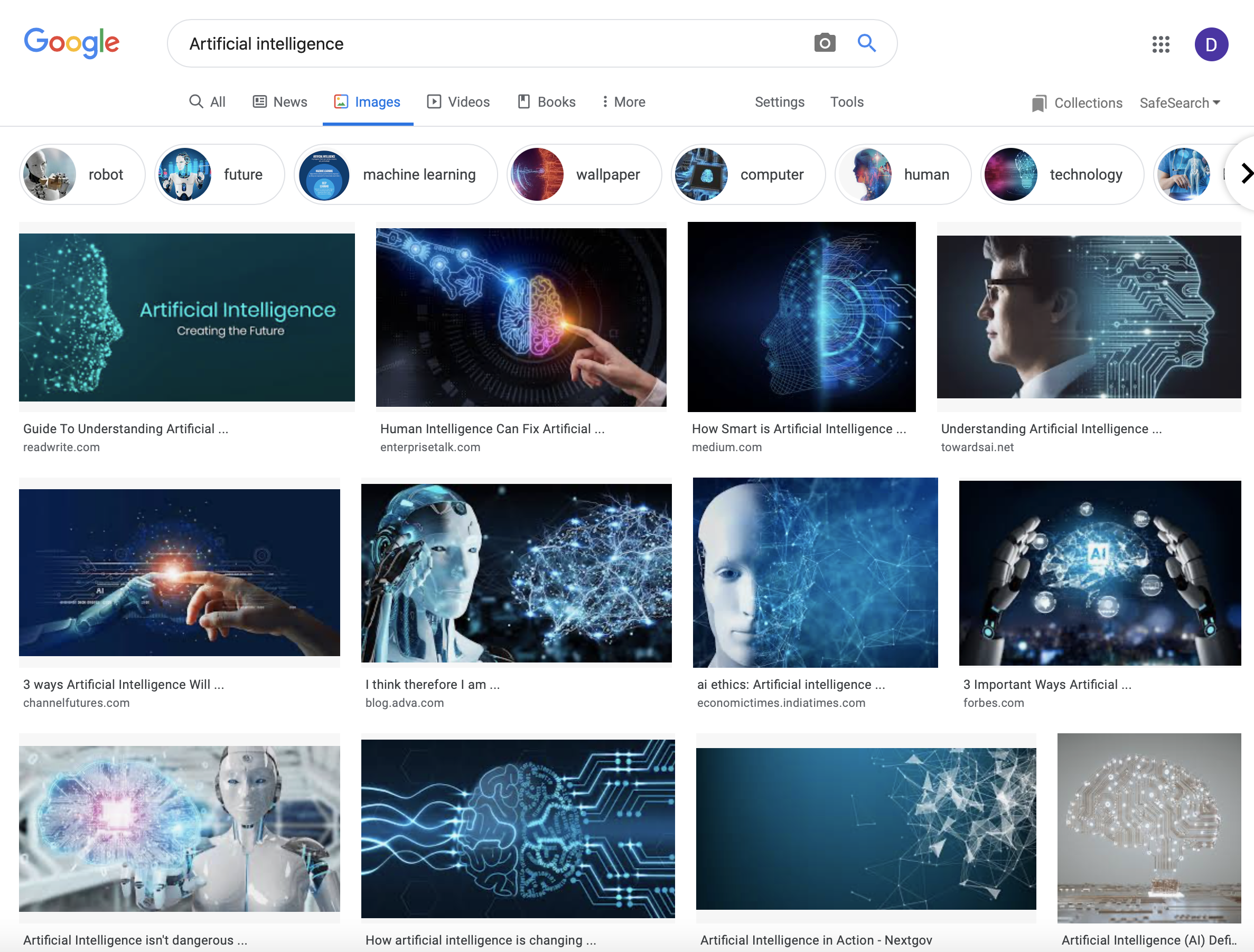

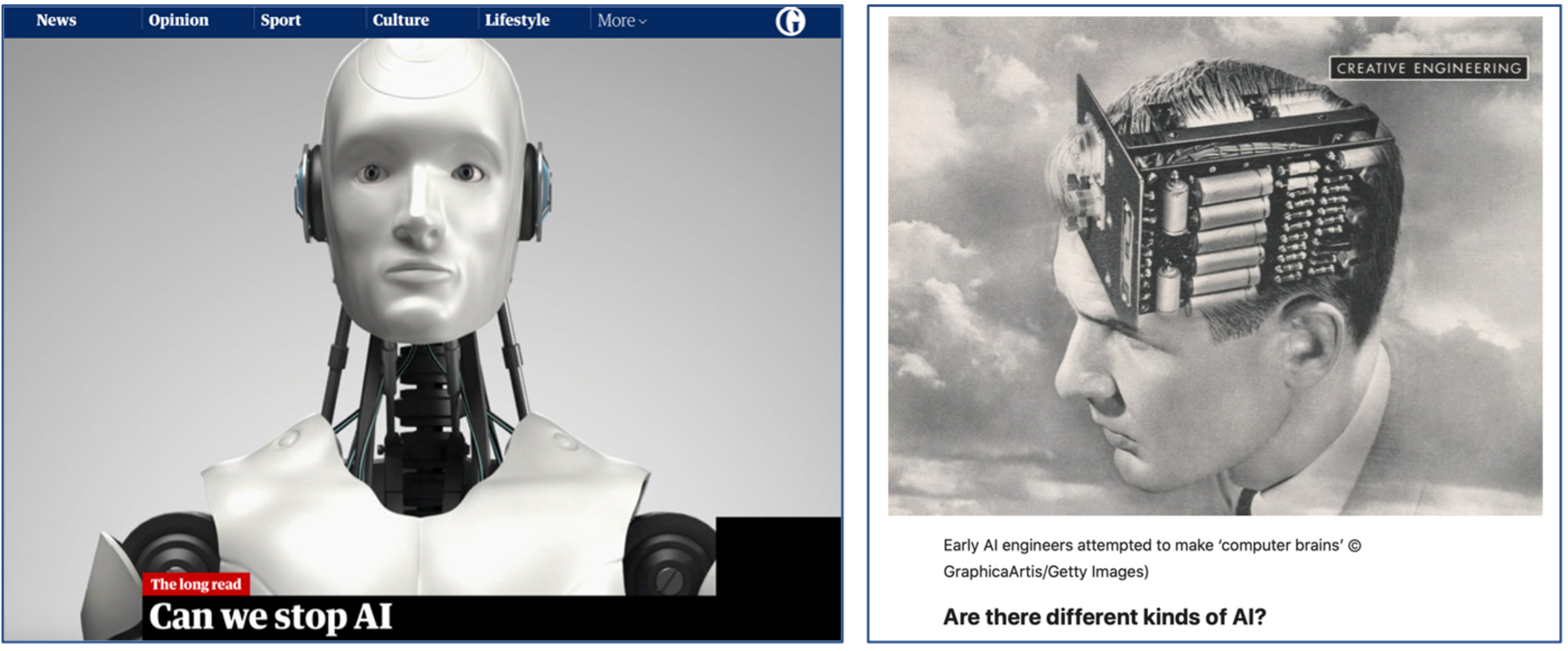

Industry homogeny also pervades the mainstream portrayal of AI. A quick Google search of the term AI produces images which are overwhelmingly white in regard to the physicality of materials [fig.13]. The anthropomorphism of AI as White is apparent across media outlets too [fig.14]. Indeed, 'The white racial frame is so institutionalised that all major media outlets operate out of some version of it' (Feagin, cited in Cave and Dihal, 2020)7. This section postulates that the 'often unnoticed and unremarked-upon fact that intelligent machines are predominantly conceived and portrayed as White' (Cave and Dihal, 2020) goes unnoticed chiefly by those who are themselves White. The 'monopoly that whiteness has over the norm' (Ganley, cited in Cave and Dihal, 2020) - in this case the representation of AI in mainstream media - perpetuates an attitude of colour-blindness. Not noticing the Whiteness of AI in the media is a privilege exclusive to White viewers. 'Communities of color frequently see and name whiteness clearly and critically, in periods when white folks have asserted their own 'color-blindness'' (Frankenberg, cited in Cave and Dihal, 2020). This attitude of not seeing race is directly imprinted into Emotional AI, as algorithms quite literally and consistently fail to accurately see more than one race. Thus, an asymmetry of power in AI is exacerbated on both a technological and a social level.

Figure 13: Google Image search of Artificial Intelligence (2020) [Screenshot]

Figure 14: Online article images of AI, again they are overwhelmingly White and white. Science Picture CO/Getty (2019) [screenshot] Available at: https://www.theguardian.com/technology/2019/mar/28/can-we-stop-robots-outsmarting-humanity-artificial-intelligence-singularity GraphicaArtis (2017) [screenshot] Available at: techcrunch

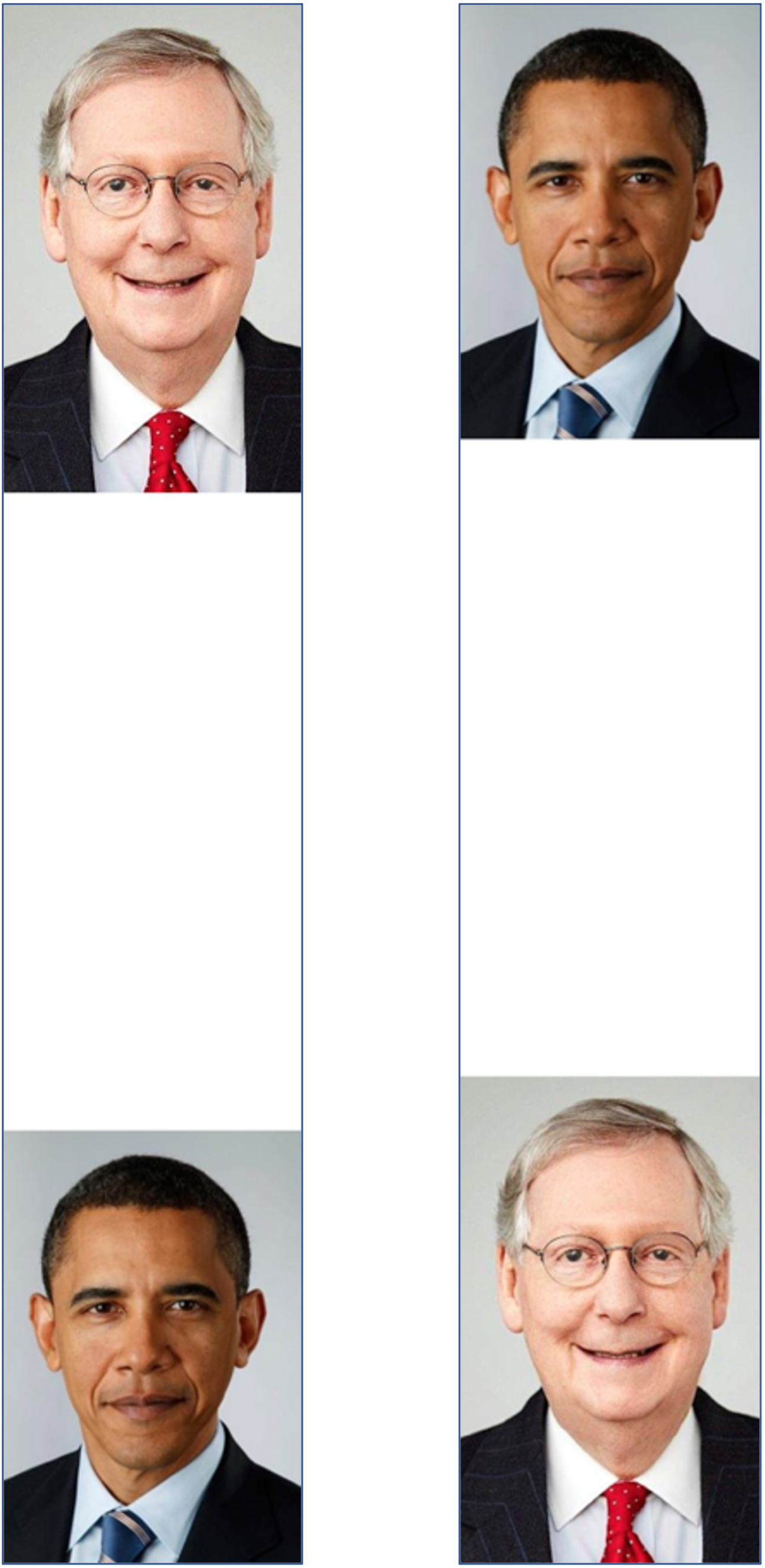

Users recently found that Twitter's image-cropping algorithm would visually prioritise White faces over Black faces [fig.15, 16]. The incident illustrates that racial bias did not go unnoticed by diverse users. In order to unify the interface of the feed and allow for multiple images to be tweeted at once, the app employs an algorithm which 'decides how to crop images before bringing it to Twitter' (Twitter, 2020). Twitter's quest to provide visual harmony on users' phones established a visual hierarchy with White faces as the focal point, pushing Black faces to be hidden beyond the crop lines [fig.17].

Figure 15: This is how the tweet appears on users' timelines after cropping by Twitter's algorithm. There are two equally sized images within the bounds provided by Twitter. Please refer to the following page to see the original images posted, prior to cropping. @bascule (2020) [screenshot] Available at: https://twitter.com/bascule/status/1307440596668182528?s=20

Figure 16: Here are the two original images prior to cropping. It is evident that Twitter's algorithm prioritised the image of Mitch McConnell over Barack Obama. @bascule (2020) [screenshot] Available at: https://twitter.com/bascule/status/1307440596668182528?s=20

Figure 17: More evidence of Twitter algorithm cropping an image of a Black man outside of the frame. @NotAFile (2020) [screenshot]

The feature echoes the belligerent binary discussed in part one of this chapter. Despite claims of thorough testing for bias, the app stated, 'There's lots of work to do, but we're grateful for everyone who spoke up and shared feedback on this' (Twitter, 2020). The example reiterates that Whiteness as the norm does not go unnoticed by underrepresented groups, in this case the diverse users of Twitter. Furthermore, 'if Black or minority groups aren't involved in decision processes, they may have true and valid perspectives that aren't making it into the authoritative message' (Ó hÉigeartaigh, 2020); the 'oversight' (Twitter, 2020) therefore stems from industry homogeny.

Monochrome Mainstream emphasises the importance of acknowledging intersectionality and underrepresented groups when discussing, creating and using AI, and thus Emotional AI. By enabling minority communities to amplify their voices and concerns surrounding algorithmic bias, Twitter improved its software - perhaps this is what the beginning of a healthy feedback loop looks like.

Conclusion

Tracing the entanglement of Emotional AI technology at various scales of impact has allowed me to critically probe different stages of the field. I have argued that Emotional AI is normative, laden with discriminatory error and riddled with opacity. At a micro level, my first section examined the impact of Emotional AI on the individual. Diminishing Dignity gave focus to Snapchat's covert implementation of Emotional AI and argued that reducing our human experience to a number sees the erasure of human dignity, while Magic Matters asserted the need for transparency surrounding the language of data and technology, arguing that emotional data should be considered an extension of our physical body. Drawing particular focus to the impact of Emotional AI on racialised communities allowed for a meso-level evaluation of implementing the technology. Belligerent Binary exhibited the racial bias prevalent in algorithms, arguing that computational prejudice exacerbates existing power imbalances in society. Operational Opacity critiqued the use of Emotional AI in law enforcement as a premature add-on to failing facial recognition systems, while the Flawed Feedback Loop illustrated how algorithmic bias originates from industry homogeny. Monochrome Mainstream saw the erasure of racialised groups from mainstream media portrayals of AI, arguing that colour-blindness amplifies an asymmetry of power in AI on both a technological and a social level.

Tackling industry bias should have the fundamental goal of making just systems. For example, Twitter's revision of the cropping algorithm should commit to eliminating image hierarchy based on skin-tone, and I look forward to seeing improvements in the platform's technological performance [fig.18]. On the other hand, Google's AI Ethics Board – which lasted less than 10 days (Wakefield, 2019) – is an example of a performative intervention which evidently had little commitment to mitigating algorithmic bias. Therefore, while performing justly on a technological level is important, AI Ethics should equally be non-performative, as opposed to theatrical, arguably disingenuous and unfulfilled in its intentions. This notion of non-performativity – the idea that performance should be achieved in a non-performative way – draws from the writing of Sara Ahmed and Judith Butler. As the notion was first applied to AI Ethics by Anna Lauren Hoffmann, I am keen to explore non-performativity in relation to the language and treatment of our emotional data.

As Emotional AI systems increasingly pervade the '[distribution of] opportunities, wealth, and knowledge' (Crawford and Joler, 2018) this paper is a way to begin to understand them. With transparency, dignity and inclusivity at the forefront of dialogue and action, we can optimise the type of future we want.

Figure 18: Screenshot of my tweet. I posted the same two images seen in Figure 17 to see if Twitter had updated its software. I will be keeping an eye on any developments. @dejana_draganic (2020) [screenshot]

BIBLIOGRAPHY

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., & Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychological Science in the Public Interest, 20, 1–68. doi:10.1177/1529100619832930 Available at: https://journals.sagepub.com/stoken/default+domain/10.1177%2F1529100619832930-FREE/pdf [accessed: 8 November 2020].

Belfield, H. (2020) Activism by the AI Community. Centre for the Study of Existential Risk, University of Cambridge, UK. [accessed: 13 February 2021].

Ben Shneiderman Human-Centered AI: Reliable, Safe and Trustworthy Dyson School of Design Engineering. 7 February 2021. [youtube].

Bridle, J. (2019). New Dark Age: Technology and the End of the Future.

BigBrotherWatch. (2020) Stop Facial Recognition. [online] available at: https://bigbrotherwatch.org.uk/campaigns/stop-facial-recognition/ [last accessed 27 November 2020].

Buolamwini. J, Gebru. T. (2018) Gender shades: Intersectional accuracy disparities in commercial gender classification. Conference on Fairness, Accountability and Transparency. [online] available at: http://proceedings.mlr.press/v81/buolamwini18a/buolamwini18a.pdf [accessed: 28 November 2020].

Cave, S. and Dihal, K. (2020) The Whiteness of AI. Available at: https://doi.org/10.1007/s13347-020-00415-6 [accessed: 28 November 2020].

Chang, S. (2015). Determining a mood for a group. United States Patent no: US 10,061,977 B1. Available at: https://patents.google.com/patent/US10061977B1/en [online][accessed: 13 October 2020].

Cherry, K. (2020) The Purpose of our Emotions: How our feelings help us survive and thrive. VeryWellMind [online] Available at: https://www.verywellmind.com/the-purpose-of-emotions-2795181 [accessed: 28 September 2020].

Crawford, K. and Joler, V. (2018) Anatomy of an AI system. Available at: https://anatomyof.ai [accessed 28 November 2020].

Crawford, Kate, Roel Dobbe, Theodora Dryer, Genevieve Fried, Ben Green, Elizabeth Kaziunas, Amba Kak, Varoon Mathur, Erin McElroy, Andrea Nill Sánchez, Deborah Raji, Joy Lisi Rankin, Rashida Richardson, Jason Schultz, Sarah Myers West, and Meredith Whittaker. AI Now 2019 Report. New York: AI Now Institute (2019) Available at: https://ainowinstitute.org/AI_Now_2019_Report.html [accessed 28 November 2020].

Cukier, K. and Mayer-Schönberger, V. (2013) Big Data: A Revolution That Will Transform How We Live, Work, and Think. John Murray.

Darwin, C. (1872) Expression of the Emotions in Man and Animals. John Murray.

Dixon-Román, E. (2016) Algo-Ritmo: More-Than-Human Performative Acts and the Racializing Assemblages of Algorithmic Architectures. SAGE Publications.

Ekman, P. and Duchenne de Boulogne, G.-B. (1990) "Duchenne and facial expression of emotion," in Cuthbertson, R. A. (ed.) The Mechanism of Human Facial Expression. Cambridge: Cambridge University Press (Studies in Emotion and Social Interaction), pp. 270–284. doi: 10.1017/CBO9780511752841.027.

Elisa Giardina-Papa. Racialising Algorithms. Recursive Colonialism [youtube].

European Commission (2016) REGULATION (EU) 2016/679 OF THE EUROPEAN PARLIAMENT AND OF THE COUNCIL of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation). Available at: ec.europa.eu/justice/data-protection/reform/files/regulation_oj_en.pdf [accessed: 7 November 2020].

European Data Protection Supervisor (2015) Opinion 4/2015 Towards a new digital ethics Data, dignity and technology. Available at: https://edps.europa.eu/sites/edp/files/publication/15-09-11_data_ethics_en.pdf [accessed: 8 November 2020].

Feagin, J. (2010) The White Racial Frame: Centuries of Racial Framing and Counter-Framing. Routledge: New York.

Fisher, M. (2009). Capitalist Realism: Is There No Alternative? ZeroBooks.

Fletcher, N. (2020) Lincolnshire Police to trial new CCTV tech that can tell if you're in a mood. Lincolnshire Live. [online] available at: https://www.lincolnshirelive.co.uk/news/local-news/new-lincolnshire-police-cctv-technology-4431274 [accessed: 28 November 2020].

Floridi, L. (2016) On human dignity as a foundation for the right to privacy. Philosophy and Technology, 29: 307-312.

Floridi, L. (2014) Open data, data protection, and group privacy. Philosophy and Technology, 27: 1-3.

Foucault, M. (1977). Discipline and Punish. London: Tavistock.

Foucault, M. (1970) The Order of Things: An Archaeology of the Human Sciences. Tavistock Publications UK.

Frankenberg, R. (1997) Introduction: local whiteness, localizing whiteness. In Displacing whiteness. Duke University Press. Available at: https://doi.org/10.1215/9780822382270-001 [accessed: 28 November 2020].

Gabriel, I. (2020) Artificial Intelligence, Values and Alignment. DeepMind. [accessed: 28 December 2020].

Google. (2018) Google Annual Diversity Report 2018. Available at: https://static.googleusercontent.com/media/diversity.google/en//static/pdf/Googel_Diversity_annual_report_2018.pdf, [accessed: 28 November 2020].

Hu, T. and Papa, E. (2020) How AI manufactures a Smile: Tung-Hui Hu interviews Artist Elisa Giardina Papa on Digital Labor. Media-N: The Journal of the New Media Caucus. Spring 2020. Volume 16, Issue 1, Pages 141-150. ISSN: 1942-017X.

Kant, I. Groundwork of the Metaphysics of Morals. Cambridge: Cambridge University Press, 1997. M. Gregor, translator; C.M. Korsgaard, editor.

Lex Fridman Podcast, Max Tegmark: Life 3.0 (2018).

Lex Fridman Podcast, Rosalind Picard: Affective Computing, Emotion, Privacy, and Health (2019).

McStay, A. (2018) Emotional AI: The Rise of Empathic Media. Sage.

Merity, S. (2017). Bias is not just in our datasets, it's in our conferences and community. [online] available at: https://smerity.com/articles/2017/bias_not_just_in_datasets.html [accessed 28 November 2020].

Metropolitan Police (2018), Freedom of Information Request. [online] available at: https://www.met.police.uk/SysSiteAssets/foi-media/metropolitan-police/disclosure_2018/april_2018/information-rights-unit---mps-policies-on-automated-facial-recognition-afr-technology [accessed: 15 August 2020].

Metropolitan Police. (2019) MPS LFR GUIDANCE DOCUMENT. Guidance for the MPS Deployment of Live Facial Recognition Technology [online] Available at:https://www.met.police.uk/SysSiteAssets/media/downloads/force-content/met/advice/lfr/mpf-lfr-guidance-document-v1-0.pdf [accessed: 26 November 2020].

Metz, C. and Wakabayashi, D. (2020) Google Researcher Says She Was Fired Over Paper Highlighting Bias in A.I. The New York Times. [online] Available at: https://www.nytimes.com/2020/12/03/technology/google-researcher-timnit-gebru.html [accessed: 24 November 2020].

Meyers, T. (2018) Why our facial expressions don't reflect our feelings. BBC News. Available at: https://www.bbc.com/future/article/20180510-why-our-facial-expressions-dont-reflect-our-feelings. [accessed: November 24 2020].

Microsoft (2020) What is the Azure Face Service? [online] available at: https://docs.microsoft.com/en-us/azure/cognitive-services/face/overview. [accessed: 24 November 2020].

NIST. (2019) Face Recognition Vendor Test (FRVT) Part 3: Demographic Effects. Available at: https://nvlpubs.nist.gov/nistpubs/ir/2019/NIST.IR.8280.pdf [accessed 27 November 2020].

Noah Levenson: Stealing Ur Feelings. Tate Modern. September 2019.

O'Brien, T. (2014) Human Dignity in a Technological Age. Good Business: Catholic Social Teaching at Work in the Marketplace. Available at: https://anselmacademic.org/wp-content/uploads/2016/05/7059_Chapter_1.pdf [accessed: 2 January 2021]

Pantic, M (2018) This is why emotional artificial intelligence matters. TEDxCERN. [online] Available at: https://tedxcern.web.cern.ch/node/618 [accessed 3 November 2020].

Parisi, L. (2013) Contagious architecture: Computation, aesthetics and space. Cambridge. The MIT Press.

Paul Ekman Group (2018) Dr.Ekman explains FACS. Paul Ekman Group. [online] Available at: https://www.youtube.com/watch?v=6RzCWRxnc84 [accessed 3 November 2020].

Paul Ekman Group, (2020) Universal Emotions [online]. Available at: https://www.paulekman.com/universal-emotions/ [accessed 8 November 2020].

Picard, R. (1997) Affective Computing. MIT Press.

Picard, R. (2015) MAS.630: Affective Computing [powerpoint presentation] Available at: https://ocw.mit.edu/courses/media-arts-and-sciences/mas-630-affective-computing-fall-2015/lecture-notes/MITMAS_630F15_Weeks1-3.pdf [online][accessed: 16 October 2020].

Rhue, L. (2018) Racial Influence on Automated Perceptions of Emotions. Available at: https://ssrn.com/abstract=3281765 [last accessed: 27 November 2020].

Sattiraju, N. (2020) Google Data Centers' Secret Cost: Billions of Gallons of Water. Bloomberg. Available at: https://www.bloomberg.com/news/features/2020-04-01/how-much-water-do-google-data-centers-use-billions-of-gallons. [accessed: 28 September 2020].

Seán Ó hÉigeartaigh and Olle Häggström. AI ethics online: A conversation on AI governance and AI risk. 15 December 2020. [youtube].

Simonite, T. (2018) AI is the future - but where are the women? WIRED. [online] available at: https://www.wired.com/story/artifical-intelligence-research-gender-imbalance/ [accessed 28 November 2020].

Snap Inc. (2020a) Privacy by Product [online] Available at: https://www.snap.com/en-GB/privacy/privacy-by-product/ [accessed 8 November 2020].

Snap Inc. (2019) Code of Conduct. [online] Available at: https://s25.q4cdn.com/442043304/files/doc_downloads/gov_doc/code-of-conduct-02012019.pdf [accessed 8 November 2020].

Snyder, P. J., Kaufman, R., Harrison, J., & Maruff, P. (2010). Charles Darwin's emotional expression "experiment" and his contribution to modern neuropharmacology. Journal of the history of the neurosciences, 19(2), 158–170. https://doi.org/10.1080/09647040903506679

Sobrino, B. Goss, D. (2019) The Mechanisms of Racialization Beyond the Black/White Binary. Routledge

Wakefield, J. (2019) Google Ethics Board Shutdown. BBC News [online] Available at: https://www.bbc.co.uk/news/technology-47825833 [accessed: 6 November 2020].

WeSee AI Ltd. (2020) [online] available at https://www.wesee.com [accessed 27 November 2020].

West, S.M, Whittaker. M and Crawford, K. (2019) Discriminating Systems: Gender, Race, and Power in AI. AI Now Institute. Available at: https://ainowinstitute.org/discriminatingsystems.html [accessed: 28 November 2020].

Wiewiórowski, W (2019) Facial recognition: A solution in a search for a problem? European Data Protection Supervisor. Available at: edps.europa.eu/node/5551 [accessed: 8 November 2020].

Williams, T.D. (2005). Who is my neighbor? Personalism and the foundations of human rights . Washington, DC: The Catholic University of America.

Zuboff, S. (2019) The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. Profile Books Ltd.

- Dignity originates from the Latin dignus meaning worthy. The word Dignity is defined by the Oxford Dictionary as 'the state or quality of being worthy of honour or respect.' The term originally implied that someone is deserving of respect based on their status. In 1948 the term human dignity was recognised, and thus dignity was redefined as inherently and universally human – regardless of class, gender, race, abilities, religion etc.

- The term Western here is used to geographically focus on AI implementation across Europe and the US. The governance of AI in China, Russia and emerging markets is beyond the scope of this essay; however, it is imperative to understand that globally there are different priorities for implementing AI.

- Face++ is based in Beijing and provides image recognition and deep-learning technology for the public sector including mobile app payment methods, public safety, retail and law enforcement. The company was valued at $4 billion in 2019.

- To reiterate, an Emotional AI system cannot view or see emotions; however, it can detect, classify and make inferences from users' facial expressions.

- LFR works by comparing passing faces in real-time against a 'Watchlist' of known people who have committed a crime [fig.11]. If the camera detects a match, then officers are instructed to stop the suspect.

- It is important to note that this penultimate section varies from earlier sections with the capitalisation of the word White. Although not widely used, the capitalisation of White refers to ethnicity and not colour - this distinction was made apparent in The Whiteness of AI by Stephen Cave and Kanta Dihal (2020).

- Institutionalised in this context alludes to the deeply embedded images of Whiteness as the norm in our Western-centric society.